Building a knowledge graph for biological experiments

To facilitate progress in the life sciences, let's develop a community-governed place to store our experimental protocols, results, and compute instructions.

At LabDAO we are coming together to build a community-owned and curated platform to run experiments, exchange reagents, and share data. Alongside a peer-to-peer marketplace for running procurement auctions for laboratory experiments, we are thinking about how best to structure experimental instructions, data, and downstream computation.

a knowledge graph for biological experiments

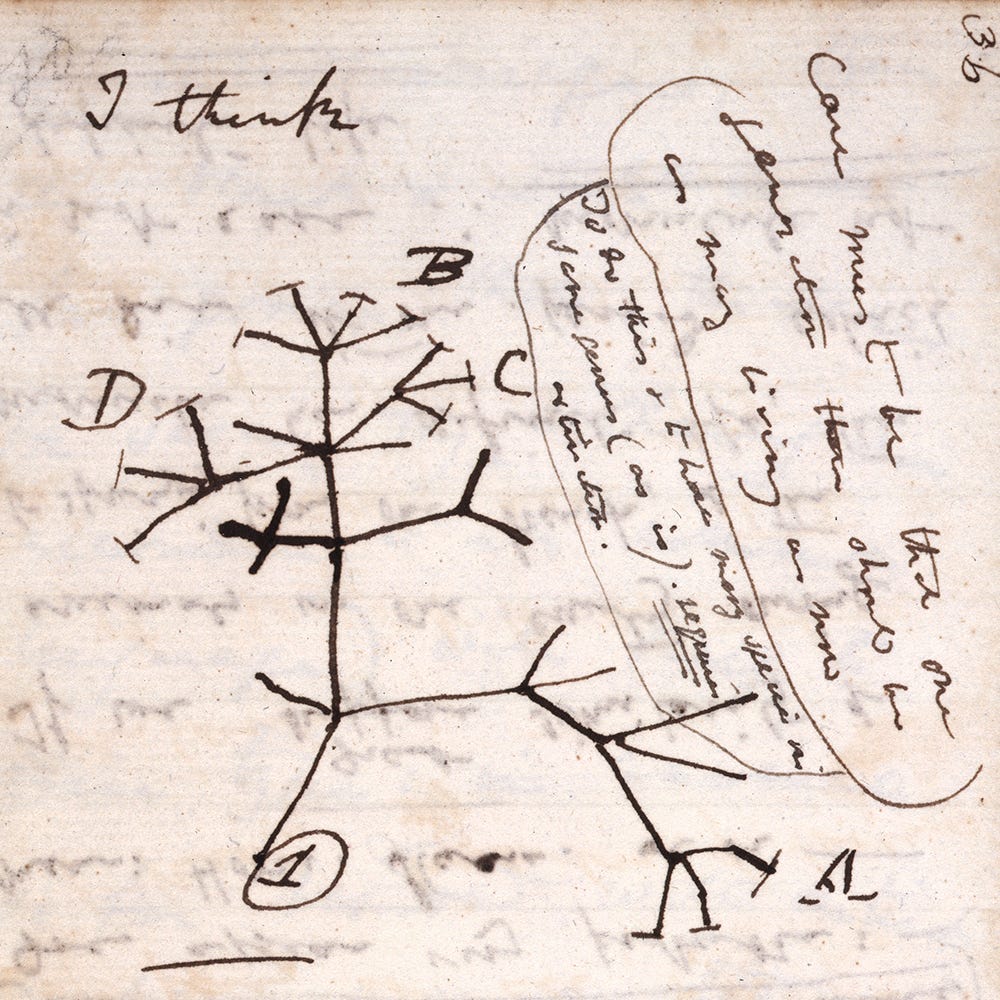

Most of human knowledge can be represented in knowledge graphs. A knowledge graph is a graph that connects different types of concepts (e.g. product, category, manufacturer, customer). In the case of experimental biological data, a basic knowledge graph consists of 5 node classes (described below). Together, I refer to them as a STEM:

the experiment - protocol name, date, the protocol designer (e.g. scientist, biotech CSO), the experimenter (e.g. CRO, core facility)

the context - incubator temperature, mouse chow originator, cage type

the biological unit - ATCC cell line id, C57BL6 strain name, and origin

the treatment - the sequence of a CRISPRi and its target gene, a small molecule

the readout - the definition of the readout mechanism coupled with links to raw data, such as sequencing counts of a pooled CRISPR screen or the raw survival data from an in-vivo experiment

These nodes can represent entries in a graph database, each with multiple attributes. For example, the experiment node has attributes such as name, date, public key of the protocol designer, public key of the experimenter, and the price of the experiment if the designer and the experimenter are not the same.
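A minimal sketch of how the five node classes and their attributes could be modeled, assuming plain Python dataclasses rather than any particular graph database. All field names and values here (for example `designer_key`, the placeholder strings, and the date) are hypothetical and only illustrate the shape of a STEM.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class Experiment:
    """The experiment node with the attributes listed in the text."""
    name: str
    date: str                               # ISO 8601 date string (illustrative value below)
    designer_key: str                       # public key of the protocol designer
    experimenter_key: Optional[str] = None  # unknown until someone runs the experiment
    price: Optional[float] = None           # only set if designer and experimenter differ


@dataclass
class Stem:
    """One STEM: an experiment plus its four companion nodes."""
    experiment: Experiment
    context: dict
    biological_unit: dict
    treatment: dict
    readout: dict


# Hypothetical example entry; every value is a placeholder.
stem = Stem(
    experiment=Experiment(
        name="pooled CRISPRi screen",
        date="2022-01-01",
        designer_key="<designer-public-key>",
    ),
    context={"incubator_temperature_c": 37},
    biological_unit={"cell_line_id": "<atcc-cell-line-id>", "origin": "<origin>"},
    treatment={"type": "CRISPRi", "target_gene": "<target-gene>"},
    readout={"mechanism": "sequencing counts", "raw_data_links": []},
)

# asdict() turns the nested dataclasses into plain dictionaries,
# which is a convenient shape for storing the entry as JSON.
stem_as_dict = asdict(stem)
```

Keeping the experiment node strongly typed while leaving the other four nodes as free-form dictionaries is just one possible trade-off; a real schema would likely constrain those as well.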

By storing biological data in a knowledge graph, we can integrate it with multiple technological primitives that are being developed today:

on-chain persistence of graph data

public procurement auctions to complete subgraphs

computation as a directed subgraph

I will go into further detail below.

on-chain persistence of graph data

A knowledge graph is only useful if it is maintained and accessible to the community. Web3 technology enables new mechanism designs that can ensure growth, adoption, and sustainable maintenance of the knowledge graph by an incentivized community. On the technical level, the graph consists of human-readable JSON entries that define the experimental parameters, pointers to data-storage locations (e.g. IPFS content identifiers or Filecoin addresses), or public keys for messaging services (e.g. Status). The graph itself can be hosted in multiple locations to guarantee uptime. Submitting a query to the graph costs tokens, while extending or curating the graph is rewarded with tokens.
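To make the idea of a human-readable JSON entry concrete, here is a small sketch, assuming a made-up entry layout. The keys (`node_class`, `raw_data`, `contact`) and the placeholder values are hypothetical. The SHA-256 digest is only a stand-in for a content-derived identifier; it is not an IPFS id and is not how any specific chain stores data.

```python
import hashlib
import json

# Hypothetical readout entry: parameters, a pointer to stored data,
# and a public key for a messaging service. All values are placeholders.
entry = {
    "node_class": "readout",
    "mechanism": "sequencing counts of a pooled CRISPR screen",
    "raw_data": [{"storage": "ipfs", "id": "<ipfs-id>"}],
    "contact": {"service": "status", "public_key": "<public-key>"},
}


def canonical_json(obj) -> str:
    """Serialize with sorted keys and fixed separators so equal entries give equal text."""
    return json.dumps(obj, sort_keys=True, separators=(",", ":"))


def entry_digest(obj) -> str:
    """Return a hex SHA-256 digest of the canonical JSON text."""
    return hashlib.sha256(canonical_json(obj).encode("utf-8")).hexdigest()


# The same entry with its top-level keys in reverse order hashes identically,
# so copies hosted in different locations can be compared by digest.
reordered = dict(reversed(list(entry.items())))
same_digest = entry_digest(entry) == entry_digest(reordered)
```

A stable digest like this is one simple way to check that copies of the graph hosted in multiple locations agree on an entry.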

public procurement auctions to complete subgraphs

When users want to create a new entry within the knowledge graph, for example to test a new proprietary compound, they can create a primitive entry, referred to as a STEM, that defines the experiment. A STEM consists of a description of the experiment, the context, the biological unit, the treatment, and the desired readout. At the time of submission, the entry within the database contains no links to measurement data, as no experiment has been conducted yet.

The entry can also contain secrets - for example, a user can deposit a public key of a messaging service instead of describing the chemical formula of the proprietary compound in a public database.

After defining an entry, users can start a procurement auction on a peer-to-peer marketplace, where they ask service providers to execute the experiment for them and deposit the data within the graph database. Again, if the data is meant to be kept secret, the data can be encrypted. Both the secrets regarding the compound and the raw data can eventually be financialized separately using web3 primitives such as IP-NFTs.
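The auction step could be sketched as follows. This assumes a simple sealed-bid rule where the lowest price at or below the requester's budget wins, which is only one possible design and not a mechanism specified here. The names `Bid`, `Auction`, `max_price`, and the example keys and prices are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Bid:
    provider_key: str  # public key of the service provider (placeholder values below)
    price: float


@dataclass
class Auction:
    stem_id: str       # identifier of the STEM entry to be completed
    max_price: float   # the requester's budget
    bids: List[Bid] = field(default_factory=list)

    def submit(self, bid: Bid) -> None:
        """Record a bid; non-positive prices are rejected."""
        if bid.price <= 0:
            raise ValueError("bid price must be positive")
        self.bids.append(bid)

    def winner(self) -> Optional[Bid]:
        """Return the cheapest bid within budget, or None if there is none."""
        eligible = [b for b in self.bids if b.price <= self.max_price]
        return min(eligible, key=lambda b: b.price, default=None)


# Toy example with made-up providers and prices.
auction = Auction(stem_id="<stem-id>", max_price=2000.0)
auction.submit(Bid("<provider-a>", 1200.0))
auction.submit(Bid("<provider-b>", 900.0))
auction.submit(Bid("<provider-c>", 2500.0))  # over budget, never eligible

winning_bid = auction.winner()
```

A real marketplace would also need to weigh provider reputation, turnaround time, and settlement, none of which this sketch covers.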

computation as a directed subgraph

An increasing amount of biology is computational. Right now the definition of experiments, the deposition of raw data, and the computational processing are often disconnected. Experimental protocols are stored in Benchling or OneNote (and can’t be shared!), data is stored on AWS or some academic repository from the ’90s, and computation infrastructure is handled by the internal dev team (that is often building the exact same stack as the biotech next door) or some department web server that will be turned off once funding is cut by the NIH.

Instead of thinking of these three steps as separate, they can be seen as one subgraph, where a STEM defines the experiment, data assets are linked to the STEM, and downstream computational processes consume the deposited data entries by following a directed acyclic computational graph. For example, each processing step can point to a private or public API, very similar to a microservice architecture. Just like with the definition of an experimental STEM and access to raw data, web3-enabled financial markets can emerge around access to these downstream computational APIs. These services would look a lot like a token-gated Hugging Face Pro plan where proceeds go to the individual maintainers and developers of the API.
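A minimal sketch of running computation as a directed acyclic graph, assuming Python 3.9+ and the standard library's `graphlib.TopologicalSorter`. The step names and the toy counts are made up for illustration, and each step is a local function here; in the picture described above, each one could instead call a private or public API.

```python
from graphlib import TopologicalSorter


def load_counts(inputs):
    """Stand-in for fetching deposited raw data linked to a STEM (toy values)."""
    return {"geneA": 10, "geneB": 30}


def normalize(inputs):
    """Convert raw counts into fractions of the total."""
    counts = inputs["load_counts"]
    total = sum(counts.values())
    return {gene: count / total for gene, count in counts.items()}


def top_gene(inputs):
    """Pick the gene with the largest normalized fraction."""
    fractions = inputs["normalize"]
    return max(fractions, key=fractions.get)


# Each step maps to the set of steps whose output it consumes.
STEPS = {"load_counts": load_counts, "normalize": normalize, "top_gene": top_gene}
DEPENDS_ON = {
    "load_counts": set(),
    "normalize": {"load_counts"},
    "top_gene": {"normalize"},
}


def run_pipeline(steps, depends_on):
    """Run every step after its dependencies; a cycle raises graphlib.CycleError."""
    results = {}
    for name in TopologicalSorter(depends_on).static_order():
        upstream = {dep: results[dep] for dep in depends_on[name]}
        results[name] = steps[name](upstream)
    return results


results = run_pipeline(STEPS, DEPENDS_ON)
```

Because the dependency map is plain data, it could be stored next to the STEM and its data links as part of the same subgraph.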

why building a community-owned knowledge graph matters

By combining the three technical primitives: (1) on-chain persistence, (2) procurement auctions, and (3) joint modeling of experiment definition, result, and computation in a directed graph, I believe collective progress in the life sciences can be accelerated while maintaining the private financial interests of experiment designers (e.g. biotech founders), laboratory service providers (e.g. CRO), and computational biologists (e.g. me).

Interested in what we are up to? Send me a DM on Twitter to join our Discord!